You are here: Foswiki>DaqSlowControl Web>HadesDaqDocumentation>DetectorControlSystem>SCSArchiverCSStudio (2022-11-16, PeterZumbruch)Edit Attach

RDB Archiver based on CS-Studio

Table of Content (short)

|

Detailed Table of Content

|

%FOREACH{"css-address" in="https://github.com/ControlSystemStudio/cs-studio"}%

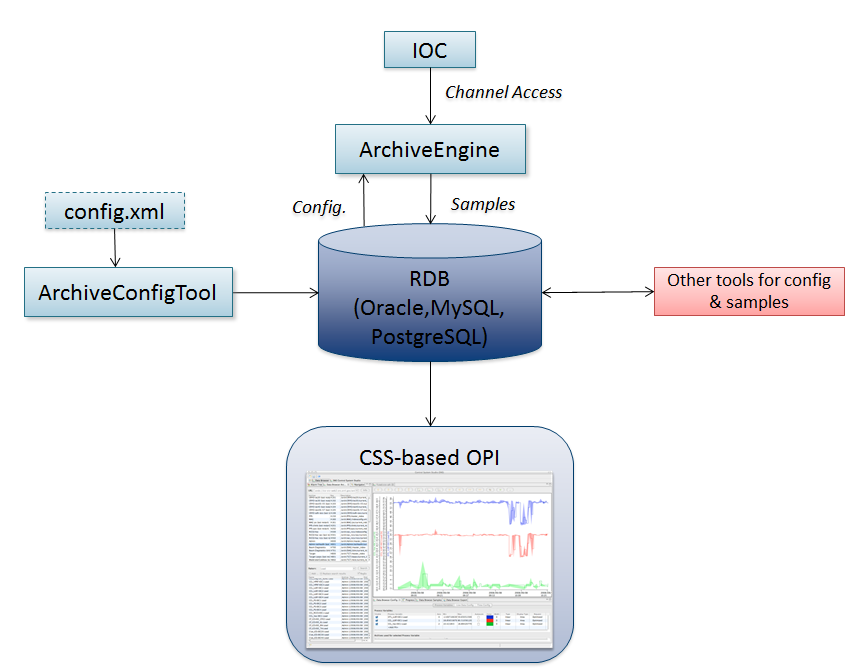

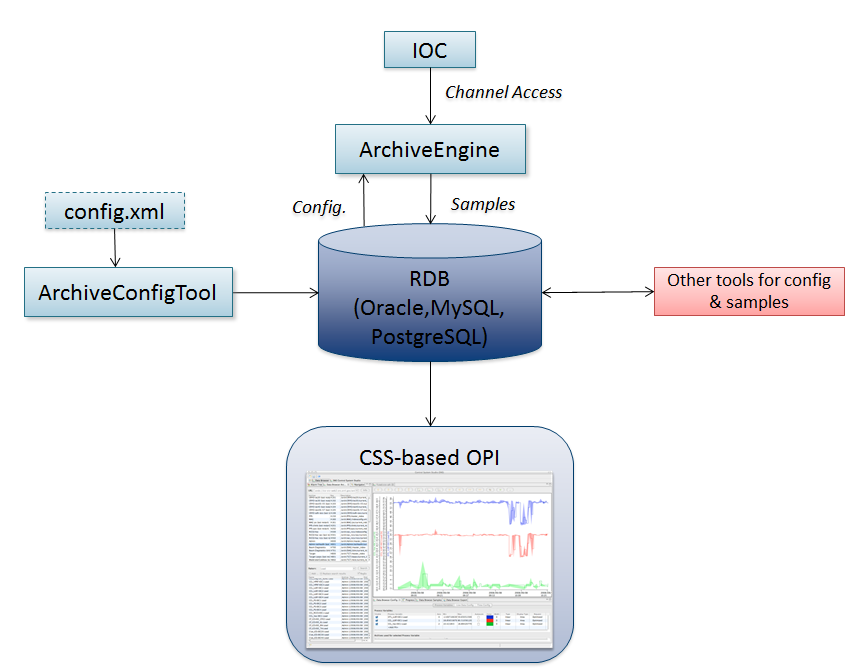

(from http://cs-studio.sourceforge.net/docbook/archive.png)

The CS-Studio RDB Archiver Engine archives EPICS Process Variables into an RDB (PostgreSQL, MySQL, Oracle). Stored data can be retrieved via CS-Studio RDB Archive Reader or any other SQL aware application.

Make sure the engine port is the same as defined in the configuration, otherwise you might get something like this - (mismatch of defined 4812 (default) and requested 4912):

%CODE{"java"}%

2016-06-02 15:56:51.010 INFO [Thread 1] org.csstudio.archive.engine.Application (start) - Archive Engine 4.0.0.201506301920

2016-06-02 15:56:51.233 INFO [Thread 1] org.csstudio.archive.engine.server.EngineServer (

Make sure the engine port is the same as defined in the configuration, otherwise you might get something like this - (mismatch of defined 4812 (default) and requested 4912):

%CODE{"java"}%

2016-06-02 15:56:51.010 INFO [Thread 1] org.csstudio.archive.engine.Application (start) - Archive Engine 4.0.0.201506301920

2016-06-02 15:56:51.233 INFO [Thread 1] org.csstudio.archive.engine.server.EngineServer () - Engine HTTP Server on http://localhost:4912/main

2016-06-02 15:56:51.233 INFO [Thread 1] org.csstudio.archive.engine.Application (start) - Reading configuration 'HadesArchiveEngine'

2016-06-02 15:56:51.858 SEVERE [Thread 1] org.csstudio.archive.engine.Application (start) - Cannot read configuration

java.lang.Exception: Engine running on port 4912 while configuration requires http://localhost:4812/main

%ENDCODE%

The command

script based using

Download this small bash script https://git.gsi.de/EPICS/Tools/scripts/Archiver/restartChannelArchiverArchiveEngine

Introduction

(from http://cs-studio.sourceforge.net/docbook/archive.png)

The CS-Studio RDB Archiver Engine archives EPICS Process Variables into an RDB (PostgreSQL, MySQL, Oracle). Stored data can be retrieved via CS-Studio RDB Archive Reader or any other SQL aware application.

Documents

Setup / Installation

Needs:- Database configuration / optional installation

- Archiver Engine configuration

Database

%FOREACH{"file" in="postgres_schema.txt"}%

%FOREACH{"path" in="master/applications/archive/archive-plugins/org.csstudio.archive.rdb/dbd"}%

%FOREACH{"prefix" in="SCSArchiverCSStudioPostgreSql"}%

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioPostgresDatabaseConfigurationLocal <a href="/edit/DaqSlowControl/SCSArchiverCSStudioPostgresDatabaseConfigurationLocal">edit</a></div> %FOREACH{"css-address" in="https://github.com/ControlSystemStudio/cs-studio"}% %FOREACH{"file" in="postgres_schema.txt"}% %FOREACH{"path" in="master/applications/archive/archive-plugins/org.csstudio.archive.rdb/dbd"}% %FOREACH{"prefix" in="SCSArchiverCSStudioPostgreSql"}%

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioPostgresDatabaseConfigurationLocal <a href="/edit/DaqSlowControl/SCSArchiverCSStudioPostgresDatabaseConfigurationLocal">edit</a></div> %FOREACH{"css-address" in="https://github.com/ControlSystemStudio/cs-studio"}% %FOREACH{"file" in="postgres_schema.txt"}% %FOREACH{"path" in="master/applications/archive/archive-plugins/org.csstudio.archive.rdb/dbd"}% %FOREACH{"prefix" in="SCSArchiverCSStudioPostgreSql"}%

PostgreSQL hosted by GSI central services

GSI PostgreSQL administrative contact: T.Badura@gsi.de

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

%NEXT{"prefix"}%

%NEXT{"path"}%

%NEXT{"file"}%

Role/User/Permissions

| hades_scs_css_archive_read | pw: k2E4g7D1c8 | |

|

|

||||||

| Hostname Port | pgsqltest.gsi.de 6846 |

|||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Database | hades_scs_css_archive |

css_ee |

||||||||

| permissions | permissions | |||||||||

| user/role | read | write | admin | read | write | admin | ||||

| pzumbruch | |

|

|

|

|

|

||||

| "p.zumbruch" | |

|

|

|

|

|

||||

| epics | pw: m7Z6a1B7g2 | |

|

|

||||||

| hades_scs_css_archive | |

|

|

|||||||

| hades_scs_css_archive_admin | |

|

|

|||||||

| "m.traxler" | |

|

|

|||||||

| "j.adamczewski-musch" | |

|

|

|||||||

Configuration

| Hostname | pgsqltest.gsi.de |

||

|---|---|---|---|

| Port | 6846 |

||

| Name | hades_scs_css_archive |

css_ee |

|

| SSL | |||

| based on | CS-Studio provided postgres scheme (raw) (local) and CS-Studio provided partitioning (raw) (local) | ||

Configuration - Load Schema

hades_scs_css_archive

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

- Hostname

- pgsqltest.gsi.de

- Port

- 6846

- Database

- hades_scs_css_archive

- Schema

- hades_scs_css_archive

- Scripts

- Create Database, Users, and Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresCreateDatabaseAndScheme</div>

- database_owner_ship provided:

- add

client_encodingandSEARCH PATH - if database does not exist, create it

- call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- add

create database DO $$ BEGIN IF NOT EXISTS( SELECT datname from pg_catalog.pg_database where datname = \'$PGDATABASE\' ) THEN EXECUTE \'CREATE DATABASE $PGDATABASE;\' END IF; END $$; \connect $PGDATABASE;

create roles / users DO $$ BEGIN IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Archiver\' ) THEN EXECUTE \'CREATE USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; EXECUTE \'ALTER USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; END IF; IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Reporter\' ) THEN EXECUTE \'CREATE USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; EXECUTE \'ALTER USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; END IF; END $$; -- create scheme DO $$ BEGIN IF NOT EXISTS( SELECT schema_name FROM information_schema.schemata WHERE schema_name = \'$schema\' ) THEN EXECUTE \'CREATE SCHEMA $schema\'; EXECUTE \'GRANT USAGE on SCHEMA $schema to $PGUSER\'; END IF; END $$; -- SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && unset PGDATABASE && psql -U PG_Owner -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - database_owner_ship provided:

- Load Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadScheme</div>

- Download

postgres_schema.txtfrom cs-studio@github - Since GSI already created the database, skip

DROP DATABASE,CREATE DATABASE - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=hades_scs_css_archive schema=hades_scs_css_archive URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && grep -v "DROP DATABASE" ${filename} | grep -v "CREATE DATABASE" | grep -v -w "\\connect" >> ${outputfile} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE%-

optionally: to speed up in favour of neglecting consistency, you could drop the

FOREIGN KEYconstraints of thesampletable:

%CODE{"bash"}% export PGUSER=export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=hades_scs_css_archive schema=hades_scs_css_archive outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_status_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_severity_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_channel_id_fkey" CASCADE; EOF cd ${outputdir} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - Download

- Partitioning

-

NOTE: alternatively, if applicable

pg_partmanExtension to manage partitioned tables by time or ID - not yet tested <div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresPartitioning</div>- database_owner_ship provided:

- Download

postgres_partitioning.txtfrom cs-studio@github - Since the table

sampleis already created, optionally includingFOREIGN KEYconstraints,- skip

DROP TABLE IF EXISTS sample, though you will run into an error

- skip

- replace

archive.prefixes by "$schema." prefix - add

client_encodingandSEARCH PATHin front - call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- Download

- Note

- if

psql -U $(PG_Owner)does not work,unset PGHOST;

%CODE{"bash"}% -- This maintenance function automatically creates partitions according to the -- specified interval (e.g. weekly or monthly). The first partition starts at --and ends a day/week/month/year later. This function has to be -- called regularly (e.g. daily by cron): -- -- @daily psql -a -h pgsqltest.gsi.de -p 6846 -U <database_owner, e.g. "postgres"> -d hades_scs_css_archive -c "SELECT hades_scs_css_archive.sample_update_partitions('2015-01-01'::timestamp, 'hades_scs_css_archive', '<database_owner, e.g. "postgres">', 'week');" -- -- This function is based on a more generic version by Nicholas Whittier -- (http://imperialwicket.com/postgresql-automating-monthly-table-partitions). %ENDCODE% ⇒ Install a cronjob on any relevant machine using .pgpass(q.v. Psql pgwiki.gsi.de #.pgpass)-

- (german) note about the credentials

- ... da Sie vorhaben, "psql" für Ihren CronJob zu nutzen, können Sie alle relevanten PostgreSQL-Connection-Daten in Shell-Variablen hinterlegen. Im pgWiki finden Sie dazu eine Anleitung / Empfehlung von uns, wie so ein PostgreSQL-Environment aussehen könnte. Es gibt auch die Datei ".pgpass, wo Sie Passwörter für Ihre Verbindungen hinterlegen können. 1x hinterlegt, bezieht "psql" standardmäßig aus dieser Datei die passenden Passwörter. Wichtig ist nur, dass CronJob unter dem User ausgeführt wird, bei dem die Datei .pgpass hinterlegt ist.

Sie können Ihr Environment sogar soweit "vorbereiten", dass Sie sogar den DBNamen, den Ufernamen, Port, Server, etc. bei Ihrem Cron-Aufruf nicht mehr mit angeben müssen. Die Environment-Variablen geben "psql" vor, wie und wohin er sich per Standard connecten soll.

- database_owner_ship provided:

- Grants

-

<div style="text-align:right" style="font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadSchemeGrants</div>

- Expect

postgres_schema.txt - Extract

GRANTcommands and for each user add those GRANTs - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=hades_scs_css_archive schema=hades_scs_css_archive URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF --- --- SET client_encoding = "UTF-8"; SET SEARCH PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && for item in $(echo $archive); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO archive/) {~s/TO archive/TO $item/g;print;}" >> $outputfile done for item in $(echo $report); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO report/) {~s/TO report/TO $item/g;print;}" >> $outputfile done psql -a -f $outputfile && cd - && rm -ir ${outputdir} && unset outputdir outputfile archive report %ENDCODE% - Expect

css_ee

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

- Hostname

- pgsqltest.gsi.de

- Port

- 6846

- Database

- css_ee

- Schema

- css_ee

- Scripts

- Create Database, Users, and Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresCreateDatabaseAndScheme</div>

- database_owner_ship provided:

- add

client_encodingandSEARCH PATH - if database does not exist, create it

- call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- add

create database DO $$ BEGIN IF NOT EXISTS( SELECT datname from pg_catalog.pg_database where datname = \'$PGDATABASE\' ) THEN EXECUTE \'CREATE DATABASE $PGDATABASE;\' END IF; END $$; \connect $PGDATABASE;

create roles / users DO $$ BEGIN IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Archiver\' ) THEN EXECUTE \'CREATE USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; EXECUTE \'ALTER USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; END IF; IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Reporter\' ) THEN EXECUTE \'CREATE USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; EXECUTE \'ALTER USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; END IF; END $$; -- create scheme DO $$ BEGIN IF NOT EXISTS( SELECT schema_name FROM information_schema.schemata WHERE schema_name = \'$schema\' ) THEN EXECUTE \'CREATE SCHEMA $schema\'; EXECUTE \'GRANT USAGE on SCHEMA $schema to $PGUSER\'; END IF; END $$; -- SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && unset PGDATABASE && psql -U PG_Owner -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - database_owner_ship provided:

- Load Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadScheme</div>

- Download

postgres_schema.txtfrom cs-studio@github - Since GSI already created the database, skip

DROP DATABASE,CREATE DATABASE - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=css_ee schema=css_ee URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && grep -v "DROP DATABASE" ${filename} | grep -v "CREATE DATABASE" | grep -v -w "\\connect" >> ${outputfile} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE%-

optionally: to speed up in favour of neglecting consistency, you could drop the

FOREIGN KEYconstraints of thesampletable:

%CODE{"bash"}% export PGUSER=export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=css_ee schema=css_ee outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_status_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_severity_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_channel_id_fkey" CASCADE; EOF cd ${outputdir} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - Download

- Partitioning

-

NOTE: alternatively, if applicable

pg_partmanExtension to manage partitioned tables by time or ID - not yet tested <div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresPartitioning</div>- database_owner_ship provided:

- Download

postgres_partitioning.txtfrom cs-studio@github - Since the table

sampleis already created, optionally includingFOREIGN KEYconstraints,- skip

DROP TABLE IF EXISTS sample, though you will run into an error

- skip

- replace

archive.prefixes by "$schema." prefix - add

client_encodingandSEARCH PATHin front - call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- Download

- Note

- if

psql -U $(PG_Owner)does not work,unset PGHOST;

%CODE{"bash"}% -- This maintenance function automatically creates partitions according to the -- specified interval (e.g. weekly or monthly). The first partition starts at --and ends a day/week/month/year later. This function has to be -- called regularly (e.g. daily by cron): -- -- @daily psql -a -h pgsqltest.gsi.de -p 6846 -U <database_owner, e.g. "postgres"> -d css_ee -c "SELECT css_ee.sample_update_partitions('2015-01-01'::timestamp, 'css_ee', '<database_owner, e.g. "postgres">', 'week');" -- -- This function is based on a more generic version by Nicholas Whittier -- (http://imperialwicket.com/postgresql-automating-monthly-table-partitions). %ENDCODE% ⇒ Install a cronjob on any relevant machine using .pgpass(q.v. Psql pgwiki.gsi.de #.pgpass)-

- (german) note about the credentials

- ... da Sie vorhaben, "psql" für Ihren CronJob zu nutzen, können Sie alle relevanten PostgreSQL-Connection-Daten in Shell-Variablen hinterlegen. Im pgWiki finden Sie dazu eine Anleitung / Empfehlung von uns, wie so ein PostgreSQL-Environment aussehen könnte. Es gibt auch die Datei ".pgpass, wo Sie Passwörter für Ihre Verbindungen hinterlegen können. 1x hinterlegt, bezieht "psql" standardmäßig aus dieser Datei die passenden Passwörter. Wichtig ist nur, dass CronJob unter dem User ausgeführt wird, bei dem die Datei .pgpass hinterlegt ist.

Sie können Ihr Environment sogar soweit "vorbereiten", dass Sie sogar den DBNamen, den Ufernamen, Port, Server, etc. bei Ihrem Cron-Aufruf nicht mehr mit angeben müssen. Die Environment-Variablen geben "psql" vor, wie und wohin er sich per Standard connecten soll.

- database_owner_ship provided:

- Grants

-

<div style="text-align:right" style="font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadSchemeGrants</div>

- Expect

postgres_schema.txt - Extract

GRANTcommands and for each user add those GRANTs - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=pgsqltest.gsi.de export PGPORT=6846 export PGDATABASE=css_ee schema=css_ee URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF --- --- SET client_encoding = "UTF-8"; SET SEARCH PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && for item in $(echo $archive); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO archive/) {~s/TO archive/TO $item/g;print;}" >> $outputfile done for item in $(echo $report); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO report/) {~s/TO report/TO $item/g;print;}" >> $outputfile done psql -a -f $outputfile && cd - && rm -ir ${outputdir} && unset outputdir outputfile archive report %ENDCODE% - Expect

PostgreSQL hosted "locally" w/ option for NOSSL

Installation

Install

-

- Debian

fully prepared: Git Repository

%FOREACH{"url" in="https://git.gsi.de/HADES/DCS/EPICS/Services/Archiver/Postgres-Server-Configuration"}%- $url

by-hand: Server Connectivity

in:

change %CODE% listen_addresses = 'localhost' %ENDCODE% to %CODE% listen_addresses = '*' %ENDCODE%ls and add in

the data directory is set to: %CODE% data_directory = '/var/lib/postgresql/$version/main' # use data in another directory %ENDCODE% should be changed to a bigger directory: %CODE% data_directory = '/data.postgres/postgresql/$version/main' # use data in another directory %ENDCODE% %NEXT{"version"}%

/etc/postgresql/$version/main/postgresql.conf change %CODE% listen_addresses = 'localhost' %ENDCODE% to %CODE% listen_addresses = '*' %ENDCODE%ls and add in

/etc/postgresql/$version/main/pg_hba.conf

%CODE%

%FOREACH{"ip" in="140.181.75.165/18 140.181.80.125/18 140.181.93.18/18"}%

hostnossl all all $ip trust

%NEXT{"ip"}%

hostnossl all all 140.181.75.165/18 trust

hostnossl all all 140.181.80.125/18 trust

hostnossl all all 140.181.93.18/18 trust

hostnossl all archiver samehost trust

hostnossl all report samenet trust

%ENDCODE%

in: /etc/postgresql/$version/main/postgresql.conf the data directory is set to: %CODE% data_directory = '/var/lib/postgresql/$version/main' # use data in another directory %ENDCODE% should be changed to a bigger directory: %CODE% data_directory = '/data.postgres/postgresql/$version/main' # use data in another directory %ENDCODE% %NEXT{"version"}%

start/restart

%CODE{"bash"}% # service postgresql restart; service postgresql status %ENDCODE%<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioPostgresDatabaseConfigurationLocal <a href="/edit/DaqSlowControl/SCSArchiverCSStudioPostgresDatabaseConfigurationLocal">edit</a></div> %FOREACH{"css-address" in="https://github.com/ControlSystemStudio/cs-studio"}% %FOREACH{"file" in="postgres_schema.txt"}% %FOREACH{"path" in="master/applications/archive/archive-plugins/org.csstudio.archive.rdb/dbd"}% %FOREACH{"prefix" in="SCSArchiverCSStudioPostgreSql"}%

Configuration lxhaddcs10 (current)

Database Configuration - lxhaddcs10 (current)

| Hostname | lxhaddcs10 |

|

|---|---|---|

| Port | 5432 |

|

| Name | archive |

|

| schema | public |

|

| SSL | ||

| based on | CS-Studio provided postgres scheme (raw) (local) and CS-Studio provided partitioning (raw) (local) | |

Role/User/Permissions - lxhaddcs10 (current)

| Hostname Port | lxhaddcs10:5432 |

|||||

|---|---|---|---|---|---|---|

| Database | archive |

|||||

| permissions | ||||||

| user/role | read | write | admin | |||

| archiver | pw: $archive | |

|

|

||

| report | pw: $report | |

|

|

||

| postgres | superuser | |

|

|

||

Configuration Scripts - lxhaddcs10 (current)

public.archive@lxhaddcs10:5432

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

%NEXT{"prefix"}%

%NEXT{"path"}%

%NEXT{"file"}%

%NEXT{"css-address"}%

- Hostname

- lxhaddcs10

- Port

- 5432

- Database

- archive

- Schema

- public

- Scripts

- Create Database, Users, and Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresCreateDatabaseAndScheme</div>

- database_owner_ship provided:

- add

client_encodingandSEARCH PATH - if database does not exist, create it

- call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- add

create database DO $$ BEGIN IF NOT EXISTS( SELECT datname from pg_catalog.pg_database where datname = \'$PGDATABASE\' ) THEN EXECUTE \'CREATE DATABASE $PGDATABASE;\' END IF; END $$; \connect $PGDATABASE;

create roles / users DO $$ BEGIN IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Archiver\' ) THEN EXECUTE \'CREATE USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; EXECUTE \'ALTER USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; END IF; IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Reporter\' ) THEN EXECUTE \'CREATE USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; EXECUTE \'ALTER USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; END IF; END $$; -- create scheme DO $$ BEGIN IF NOT EXISTS( SELECT schema_name FROM information_schema.schemata WHERE schema_name = \'$schema\' ) THEN EXECUTE \'CREATE SCHEMA $schema\'; EXECUTE \'GRANT USAGE on SCHEMA $schema to $PGUSER\'; END IF; END $$; -- SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && unset PGDATABASE && psql -U PG_Owner -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - database_owner_ship provided:

- Load Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadScheme</div>

- Download

postgres_schema.txtfrom cs-studio@github - Since GSI already created the database, skip

DROP DATABASE,CREATE DATABASE - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=public URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && grep -v "DROP DATABASE" ${filename} | grep -v "CREATE DATABASE" | grep -v -w "\\connect" >> ${outputfile} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE%-

optionally: to speed up in favour of neglecting consistency, you could drop the

FOREIGN KEYconstraints of thesampletable:

%CODE{"bash"}% export PGUSER=export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=public outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_status_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_severity_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_channel_id_fkey" CASCADE; EOF cd ${outputdir} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - Download

- Partitioning

-

NOTE: alternatively, if applicable

pg_partmanExtension to manage partitioned tables by time or ID - not yet tested <div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresPartitioning</div>- database_owner_ship provided:

- Download

postgres_partitioning.txtfrom cs-studio@github - Since the table

sampleis already created, optionally includingFOREIGN KEYconstraints,- skip

DROP TABLE IF EXISTS sample, though you will run into an error

- skip

- replace

archive.prefixes by "$schema." prefix - add

client_encodingandSEARCH PATHin front - call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- Download

- Note

- if

psql -U $(PG_Owner)does not work,unset PGHOST;

%CODE{"bash"}% -- This maintenance function automatically creates partitions according to the -- specified interval (e.g. weekly or monthly). The first partition starts at --and ends a day/week/month/year later. This function has to be -- called regularly (e.g. daily by cron): -- -- @daily psql -a -h lxhaddcs10 -p 5432 -U archiver -d archive -c "SELECT public.sample_update_partitions('2015-01-01'::timestamp, 'public', 'archiver', 'week');" -- -- This function is based on a more generic version by Nicholas Whittier -- (http://imperialwicket.com/postgresql-automating-monthly-table-partitions). %ENDCODE% ⇒ Install a cronjob on any relevant machine using .pgpass(q.v. Psql pgwiki.gsi.de #.pgpass)-

- (german) note about the credentials

- ... da Sie vorhaben, "psql" für Ihren CronJob zu nutzen, können Sie alle relevanten PostgreSQL-Connection-Daten in Shell-Variablen hinterlegen. Im pgWiki finden Sie dazu eine Anleitung / Empfehlung von uns, wie so ein PostgreSQL-Environment aussehen könnte. Es gibt auch die Datei ".pgpass, wo Sie Passwörter für Ihre Verbindungen hinterlegen können. 1x hinterlegt, bezieht "psql" standardmäßig aus dieser Datei die passenden Passwörter. Wichtig ist nur, dass CronJob unter dem User ausgeführt wird, bei dem die Datei .pgpass hinterlegt ist.

Sie können Ihr Environment sogar soweit "vorbereiten", dass Sie sogar den DBNamen, den Ufernamen, Port, Server, etc. bei Ihrem Cron-Aufruf nicht mehr mit angeben müssen. Die Environment-Variablen geben "psql" vor, wie und wohin er sich per Standard connecten soll.

- database_owner_ship provided:

- Grants

-

<div style="text-align:right" style="font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadSchemeGrants</div>

- Expect

postgres_schema.txt - Extract

GRANTcommands and for each user add those GRANTs - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=public URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF --- --- SET client_encoding = "UTF-8"; SET SEARCH PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && for item in $(echo $archive); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO archive/) {~s/TO archive/TO $item/g;print;}" >> $outputfile done for item in $(echo $report); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO report/) {~s/TO report/TO $item/g;print;}" >> $outputfile done psql -a -f $outputfile && cd - && rm -ir ${outputdir} && unset outputdir outputfile archive report %ENDCODE% - Expect

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioPostgresDatabaseConfigurationLocal <a href="/edit/DaqSlowControl/SCSArchiverCSStudioPostgresDatabaseConfigurationLocal">edit</a></div> %FOREACH{"css-address" in="https://github.com/ControlSystemStudio/cs-studio"}% %FOREACH{"file" in="postgres_schema.txt"}% %FOREACH{"path" in="master/applications/archive/archive-plugins/org.csstudio.archive.rdb/dbd"}% %FOREACH{"prefix" in="SCSArchiverCSStudioPostgreSql"}%

Configuration lxhaddcs10 (future)

Database Configuration - lxhaddcs10 (future)

| Hostname | lxhaddcs10 |

|

|---|---|---|

| Port | 5432 |

|

| Name | archive |

|

| schema | archive |

|

| SSL | ||

| based on | CS-Studio provided postgres scheme (raw) (local) and CS-Studio provided partitioning (raw) (local) | |

Role/User/Permissions - lxhaddcs10 (future)

| Hostname Port | lxhaddcs10:5432 |

|||||

|---|---|---|---|---|---|---|

| Database | archive |

|||||

| permissions | ||||||

| user/role | read | write | admin | |||

| archiver | pw: $archive | |

|

|

||

| report | pw: $report | |

|

|

||

| postgres | superuser | |

|

|

||

Configuration Scripts - lxhaddcs10 (future)

archive.archive@lxhaddcs10:5432

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioConfigurationScripts <a href="/edit/DaqSlowControl/SCSArchiverCSStudioConfigurationScripts">edit</a></div>

%NEXT{"prefix"}%

%NEXT{"path"}%

%NEXT{"file"}%

%NEXT{"css-address"}%

- Hostname

- lxhaddcs10

- Port

- 5432

- Database

- archive

- Schema

- archive

- Scripts

- Create Database, Users, and Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresCreateDatabaseAndScheme</div>

- database_owner_ship provided:

- add

client_encodingandSEARCH PATH - if database does not exist, create it

- call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- add

create database DO $$ BEGIN IF NOT EXISTS( SELECT datname from pg_catalog.pg_database where datname = \'$PGDATABASE\' ) THEN EXECUTE \'CREATE DATABASE $PGDATABASE;\' END IF; END $$; \connect $PGDATABASE;

create roles / users DO $$ BEGIN IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Archiver\' ) THEN EXECUTE \'CREATE USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; EXECUTE \'ALTER USER $PG_Archiver with PASSWORD \'$PG_Archiver_pw\'\'; END IF; IF NOT EXISTS( SELECT usename from pg_user where usename = \'$PG_Reporter\' ) THEN EXECUTE \'CREATE USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; EXECUTE \'ALTER USER $PG_Reporter with PASSWORD \'$PG_Reporter_pw\'\'; END IF; END $$; -- create scheme DO $$ BEGIN IF NOT EXISTS( SELECT schema_name FROM information_schema.schemata WHERE schema_name = \'$schema\' ) THEN EXECUTE \'CREATE SCHEMA $schema\'; EXECUTE \'GRANT USAGE on SCHEMA $schema to $PGUSER\'; END IF; END $$; -- SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && unset PGDATABASE && psql -U PG_Owner -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - database_owner_ship provided:

- Load Scheme

-

<div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadScheme</div>

- Download

postgres_schema.txtfrom cs-studio@github - Since GSI already created the database, skip

DROP DATABASE,CREATE DATABASE - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=archive URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && grep -v "DROP DATABASE" ${filename} | grep -v "CREATE DATABASE" | grep -v -w "\\connect" >> ${outputfile} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE%-

optionally: to speed up in favour of neglecting consistency, you could drop the

FOREIGN KEYconstraints of thesampletable:

%CODE{"bash"}% export PGUSER=export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=archive outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF

SET client_encoding = "UTF-8"; SET SEARCH_PATH TO "$schema"; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_status_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_severity_id_fkey" CASCADE; ALTER TABLE sample DROP CONSTRAINT IF EXISTS "sample_channel_id_fkey" CASCADE; EOF cd ${outputdir} && psql -a -f ${outputfile} && rm -ir ${outputdir} && cd - %ENDCODE% - Download

- Partitioning

-

NOTE: alternatively, if applicable

pg_partmanExtension to manage partitioned tables by time or ID - not yet tested <div style="text-align:right;font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioPostgresPartitioning</div>- database_owner_ship provided:

- Download

postgres_partitioning.txtfrom cs-studio@github - Since the table

sampleis already created, optionally includingFOREIGN KEYconstraints,- skip

DROP TABLE IF EXISTS sample, though you will run into an error

- skip

- replace

archive.prefixes by "$schema." prefix - add

client_encodingandSEARCH PATHin front - call

pqslwith- option

"-f"loading modified partitioning - option

"-a"to show all

- option

- Download

- Note

- if

psql -U $(PG_Owner)does not work,unset PGHOST;

%CODE{"bash"}% -- This maintenance function automatically creates partitions according to the -- specified interval (e.g. weekly or monthly). The first partition starts at --and ends a day/week/month/year later. This function has to be -- called regularly (e.g. daily by cron): -- -- @daily psql -a -h lxhaddcs10 -p 5432 -U postgres -d archive -c "SELECT archive.sample_update_partitions('2015-01-01'::timestamp, 'archive', 'postgres', 'week');" -- -- This function is based on a more generic version by Nicholas Whittier -- (http://imperialwicket.com/postgresql-automating-monthly-table-partitions). %ENDCODE% ⇒ Install a cronjob on any relevant machine using .pgpass(q.v. Psql pgwiki.gsi.de #.pgpass)-

- (german) note about the credentials

- ... da Sie vorhaben, "psql" für Ihren CronJob zu nutzen, können Sie alle relevanten PostgreSQL-Connection-Daten in Shell-Variablen hinterlegen. Im pgWiki finden Sie dazu eine Anleitung / Empfehlung von uns, wie so ein PostgreSQL-Environment aussehen könnte. Es gibt auch die Datei ".pgpass, wo Sie Passwörter für Ihre Verbindungen hinterlegen können. 1x hinterlegt, bezieht "psql" standardmäßig aus dieser Datei die passenden Passwörter. Wichtig ist nur, dass CronJob unter dem User ausgeführt wird, bei dem die Datei .pgpass hinterlegt ist.

Sie können Ihr Environment sogar soweit "vorbereiten", dass Sie sogar den DBNamen, den Ufernamen, Port, Server, etc. bei Ihrem Cron-Aufruf nicht mehr mit angeben müssen. Die Environment-Variablen geben "psql" vor, wie und wohin er sich per Standard connecten soll.

- database_owner_ship provided:

- Grants

-

<div style="text-align:right" style="font-size:smaller" class='TMLhtml' > SCSArchiverCSStudioLoadSchemeGrants</div>

- Expect

postgres_schema.txt - Extract

GRANTcommands and for each user add those GRANTs - add

client_encodingand "SEARCH PATH" in front - call

pqslwith- option

"-f"loading modified schema - option

"-a"to show all

- option

export PGHOST=lxhaddcs10 export PGPORT=5432 export PGDATABASE=archive schema=archive URL=$css-address/raw/$path/$file filename=$(basename $URL ) outputdir=$(mktemp -d); outputfile=$(mktemp -p ${outputdir}) ; cat > ${outputfile} << EOF --- --- SET client_encoding = "UTF-8"; SET SEARCH PATH TO "$schema"; EOF cd ${outputdir} && wget -N --no-check-certificate ${URL} && for item in $(echo $archive); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO archive/) {~s/TO archive/TO $item/g;print;}" >> $outputfile done for item in $(echo $report); do perl -ne 'if (/^\bGRANT\b/ ... / TO (\w+);/m) {~s/^-- .*?\n//g; ~ s/\n/ /g; ~s/;\s*/;\n/g; print;}' ${filename} | perl -ne "if (/TO report/) {~s/TO report/TO $item/g;print;}" >> $outputfile done psql -a -f $outputfile && cd - && rm -ir ${outputdir} && unset outputdir outputfile archive report %ENDCODE% - Expect

CS-Studio - RDB Archiver

Currently located atlxhadeb06

Requirements

- CS-Studio 4 requires at least Java 8 by Oracle. Therefore make sure you have it installed:

Sources download

Current versions can be build on its own based on the GIT hub repository of CS-Studio: Prebuilt binaries can be found at https://ics-web.sns.ornl.gov/css/updates/apps/ and/or https://controlssoftware.sns.ornl.gov/css_rcp/nightly/ Look for:- archive_config...

- archvie_engine...

- archive-config-4.1.0-linux.gtk.x86_64.zip : local copy

- archive-engine-4.1.0-linux.gtk.x86_64.zip : local copy

File locations

Install Area

-

/home/epics/EPICS/programs/css/...- directory read-only to group and others

- modifications on

<Application>.inifile to allow multiuser operation "shared configuration"/"shared install"

-

archive-config-4.1.0

%CODE{"java"}%

-Dosgi.configuration.area.default=@user.home/.css/ArchiveConfigTool_3.2.15/configuration

-Dosgi.instance.area.default=@user.home/CSS-Workspaces/ArchiveConfigTool_3.2.15/workspace

%ENDCODE%

-

archive-engine-4.1.0

%CODE{"java"}%

-Dosgi.configuration.area.default=@user.home/.css/ArchiveEngine_4.0.0/configuration

-Dosgi.instance.area.default=@user.home/CSS-Workspaces/ArchiveEngine_4.0.0/workspace

%ENDCODE%

-

/home/epics/EPICS/bin/- Caller Scripts to provide correct java version of Oracle (java version "1.8.0_66", Sun/Oracle):

%CODE{"bash"}%

#!/bin/bash

export JAVA_HOME=/home/epics/EPICS/programs/java/jdk1.8.0_66;

declare -F addToPath && addToPath $JAVA_HOME/bin || PATH=$(echo $JAVA_HOME/bin${PATH:+:$PATH} | sed -e 's/^://' -e 's/:$//')

export PATH

/home/epics/EPICS/programs/css/archive-config-4.1.0/ArchiveConfigTool $*

%ENDCODE%

%CODE{"bash"}%

#!/bin/bash

export JAVA_HOME=/home/epics/EPICS/programs/java/jdk1.8.0_66;

declare -F addToPath && addToPath $JAVA_HOME/bin || PATH=$(echo $JAVA_HOME/bin${PATH:+:$PATH} | sed -e 's/^://' -e 's/:$//')

export PATH

/home/epics/EPICS/programs/css/archive-engine-4.1.0/ArchiveEngine $*

%ENDCODE%

Configuration Area

- can be changed by runtime option

-

-configuration <location> - default

-

~/.css/ArchiveEngine_4.0.0/configuration

~/.css/ArchiveConfigTool_3.2.15/configuration

Instance Area

- can be changed by runtime option

-

-data <location> - default

-

~/CSS-Workspaces/ArchiveEngine_4.0.0/workspace

~/CSS-Workspaces/ArchiveConfigTool_3.2.15/workspace

CS-Studio - Database credentials

There are several ways to provide the database passwords (archiving (write), retrieving (read):

- every time via command line option :

-rdb_password <plain text password> - every time via pluginCustomization :

- command line option :

-pluginCustomization <file> - where <file> contains the line:

org.csstudio.archive.rdb/password=<plain text password>

- command line option :

- one time command line option :

-set_password org.csstudio.archive.rdb/password

setting secure preferences interactively and storing it insecure_store.datdepending on the settings oforg.csstudio.security/secure_preference_locationin the (pluginCustomization) settings file (c.f Section "Secure Preferences", and Configuring Authentication, Authorization and Secure Preferences of "Chapter 13. Authentication and Authorization Part I. CS-Studio Guide" ):- "Default": Use the default location, which is typically the user's home directory.

- Advantage: It's the default

- Disadvantage: You won't always know where the preferences are.

- "Instance": Use the Eclipse 'instance', i.e. the workspace.

- Advantage: You know where it is, and each workspace will have its own settings.

- Disadvantage: Each workspace has different settings.

- "Install": Use the Eclipse 'install' location, i.e. where the product is installed.

- Advantage: You know where it is, and every workspace for that product will have the same settings. Good for a "global" setup.

- Disadvantage: Ordinary users cannot (should not) have write permissions.

- "Default": Use the default location, which is typically the user's home directory.

Status

%FOREACH{"user" in="hades_scs_css_archive"}% At the moment the encrypted credentials for$user@pgsqltest.gsi.de:6846/hades_scs_css_archive are stored at the install location of the config tool or the engine respectively.

Logged with installation credentials (e.g. user epics), either of these will set it:

%CODE{"bash"}%

$> su - epics

$> . epics &&

ArchiveConfigTool -rdb_user $user -set_password org.csstudio.archive.rdb/password &&

ArchiveEngineCSS -set_password org.csstudio.archive.rdb/password

%ENDCODE%

%NEXT{"user"}%

GIT repository

Engine Configuration

- definitions

- convert old archiver "cfg" into new "XML"

- import configuration into configuration database

- list/check configurations

- goto operations

Code Repositories

CVS

- GSI Linux Cluster

-

CVSROOT - /misc/hadesprojects/slowcontrol/cvsroot

- ==Module

- Archiver.css

-

git

Directory

%CODE{"bash"}% APPS_TOP=~scs/playground/EPICS/apps #APPS_TOP=~scs/apps ARCHIVER_CSS_TOP=$APPS_TOP/Archiver.css cd $ARCHIVER_CSS_TOP/configuration %ENDCODE%Converting old archiver 'cfg' into new 'xml' configuration files

Theconfiguration directory of the css archiver at $APPS_TOP/Archiver/css/configuration/old contains as a starting point the copy of the archiver configuration of the old archiver.

By using the old/ConvertEngineConfig.pl script (which is a copy of the latest old archiver tool set) you could generate an xml configuration file:

%CODE{"bash"}%

ConvertEngineConfig.pl -v -d old/engineconfig.dtd -o hadesArchive.xml hadesArchive.cfg

%ENDCODE%

or alternatively the wrapper script convert.sh which cares about existing files

%CODE{"bash"}%

old/convert.sh [[input:default=hadesArchive.cfg] output]

%ENDCODE%

Import / Modify Engine Configuration Database

The XML output file of the conversion is used byArchiveConfigTool to generate/modify the configuration of the archive engine, which is also stored in the database. -

pluginCustomization

pluginCustomization - to ease common rdb a plugin customization settings file is used, see plugin customization

hades_scs_css_archive

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioEngineConfiguration <a href="/edit/DaqSlowControl/SCSArchiverCSStudioEngineConfiguration">edit</a></div>

%CODE{"bash"}%

pluginCustomization=$ARCHIVER_CSS_TOP/pluginCustomization/settings_hades_scs_css_archive.ini

engine_configuration_file=hadesArchive_css.xml

engine_name=HadesArchiveEngine

engine_port=4912

engine_description=HADES_Archiver

engine_replace=yes

engine_steal_channels=yes

[ $engine_replace == "yes" ] && engine_replace=-replace_engine || engine_replace=""

[ $engine_steal_channels == "yes" ] && engine_steal_channels=-steal_channels || engine_steal_channels=""

#import

cd $ARCHIVER_CSS_TOP/configuration && [ -f $pluginCustomization ] && [ -f "$engine_configuration_file" ] &&

ArchiveConfigTool -pluginCustomization $pluginCustomization -import -engine $engine_name -port $engine_port -config $engine_configuration_file -description $engine_description $engine_replace

%ENDCODE%

lxhadeb05_archive

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioEngineConfiguration <a href="/edit/DaqSlowControl/SCSArchiverCSStudioEngineConfiguration">edit</a></div>

%CODE{"bash"}%

pluginCustomization=$ARCHIVER_CSS_TOP/pluginCustomization/settings_lxhadeb05_archive.ini

engine_configuration_file=hadesArchive_css.xml

engine_name=HadesArchiveEngine

engine_port=4912

engine_description=HADES_Archiver

engine_replace=yes

engine_steal_channels=yes

[ $engine_replace == "yes" ] && engine_replace=-replace_engine || engine_replace=""

[ $engine_steal_channels == "yes" ] && engine_steal_channels=-steal_channels || engine_steal_channels=""

#import

cd $ARCHIVER_CSS_TOP/configuration && [ -f $pluginCustomization ] && [ -f "$engine_configuration_file" ] &&

ArchiveConfigTool -pluginCustomization $pluginCustomization -import -engine $engine_name -port $engine_port -config $engine_configuration_file -description $engine_description $engine_replace

%ENDCODE%

lxhaddcs10_archive

<div style="text-align:right;font-size:smaller">SCSArchiverCSStudioEngineConfiguration <a href="/edit/DaqSlowControl/SCSArchiverCSStudioEngineConfiguration">edit</a></div>

%CODE{"bash"}%

pluginCustomization=$ARCHIVER_CSS_TOP/pluginCustomization/settings_lxhaddcs10_archive.ini

engine_configuration_file=hadesArchive_css.xml

engine_name=HadesArchiveEngine

engine_port=4912

engine_description=HADES_Archiver

engine_replace=yes

engine_steal_channels=yes

[ $engine_replace == "yes" ] && engine_replace=-replace_engine || engine_replace=""

[ $engine_steal_channels == "yes" ] && engine_steal_channels=-steal_channels || engine_steal_channels=""

#import

cd $ARCHIVER_CSS_TOP/configuration && [ -f $pluginCustomization ] && [ -f "$engine_configuration_file" ] &&

ArchiveConfigTool -pluginCustomization $pluginCustomization -import -engine $engine_name -port $engine_port -config $engine_configuration_file -description $engine_description $engine_replace

%ENDCODE%

List existing engine configurations

%CODE{"bash"}%

pluginCustomization=$ARCHIVER_CSS_TOP/pluginCustomization/settings_hades_scs_css_archive.ini

ArchiveConfigTool -pluginCustomization $pluginCustomization -list

%ENDCODE%

Operation

Start Engine

by Hand

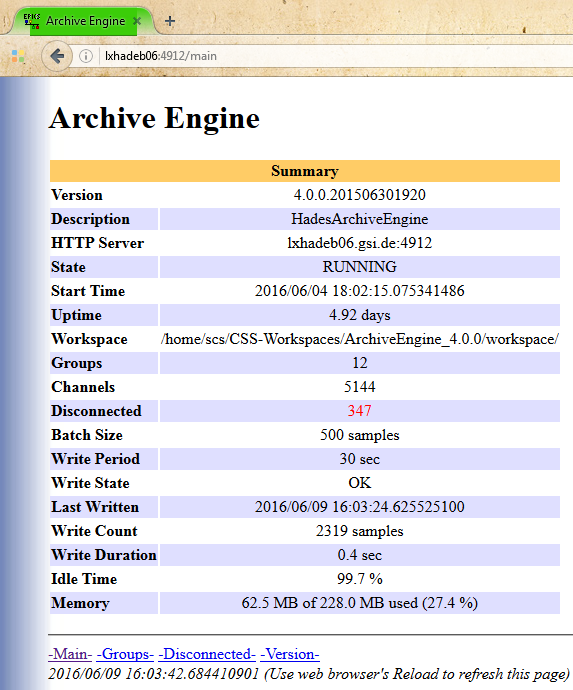

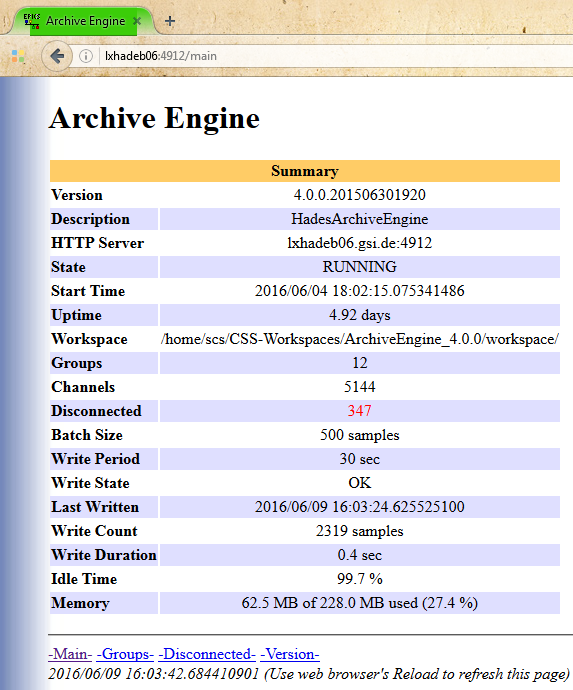

%CODE{"perl"}% engine_name=HadesArchiveEngine engine_port=4912 pluginCustomization=$ARCHIVER_CSS_TOP/pluginCustomization/settings_hades_scs_css_archive.ini ArchiveEngineCSS -port $engine_port -engine $engine_name -data workspace/mdc -pluginCustomization $pluginCustomization %ENDCODE% A running Engine will have an HTTP server at e.g. http://localhost:4912/main an will look like this:

using crontab / procServ

On the basis of the "by Hand" a suitable cronjob is set up: At~scs/procServ the file archiverCSS.sh handles the configuration of the start routine.

Its function userDefines holds the important informations, change it if necessary, and update it to the code revision.

%CODE{"bash"}%

#specific user predefines

#the userDefines function is called within the $startBackgroundEpicsIocScriptName script

userDefines()

{

userProcServTelnetPort=4853

userProcServTelnetLogPort=-1

userIOC=archiverCSS-BEAUTY-$(hostname)

# userWorkingDir=~/apps/Archiver/css/startup

userWorkingDir=~/playground/EPICS/apps/Archiver.css/startup

userCommandExecutable=startEngineCSS.sh

userCommandArgument=

%ENDCODE%

crontab -l should contain the following line of code:

%CODE{"bash"}%

@reboot cd ~scs/procServ && ./archiverCSS.sh > ~/log/archiverCSS.crontab.start 2>&1

%ENDCODE%

With those scripts a procServ session with port 4853 is created, which can be locally reached and manipulated using telnet (see procServ man pages):

%CODE{"bash"}%

telnet localhost 4813

%ENDCODE%

Restarting Engine

via web browser

The RDB Channel Archiver offers the opportunity to restart the engine by simple calling its restart web page:http://localhost:4912/restart

script based using curl

Download this small bash script https://git.gsi.de/EPICS/Tools/scripts/Archiver/restartChannelArchiverArchiveEngine

via procServ - "brute force"

Login to the procServ session and kill/restart the child process with^X.

Attach CSS Browser

lxhaddcs10

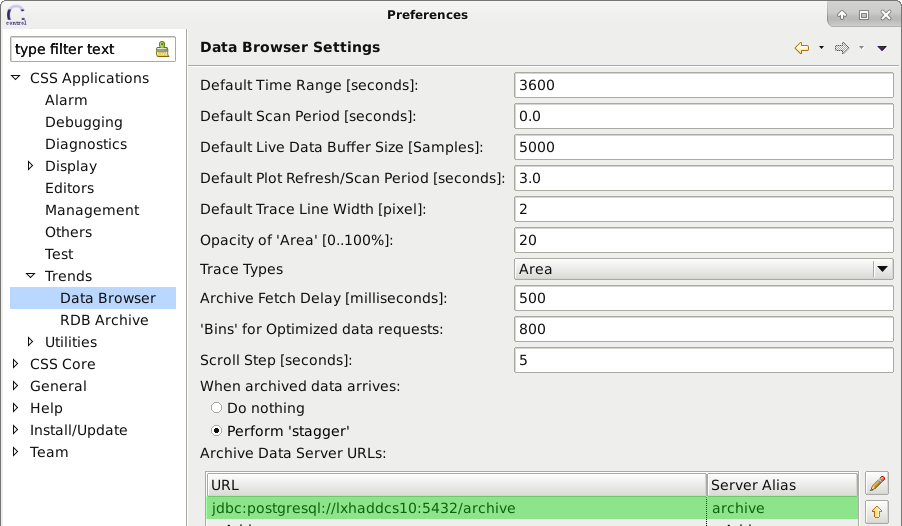

Settings needed in CSS preferences (restart needed) Just look at the line starting withjdbc: - Archiver preference settings 1:

-

URL: jdbc:postgresql://lxhaddcs10:5432/archive

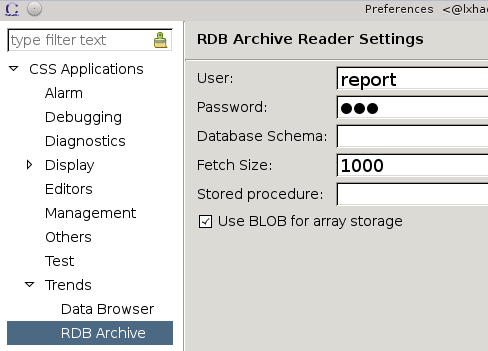

- Archiver preference settings 2:

- User: report

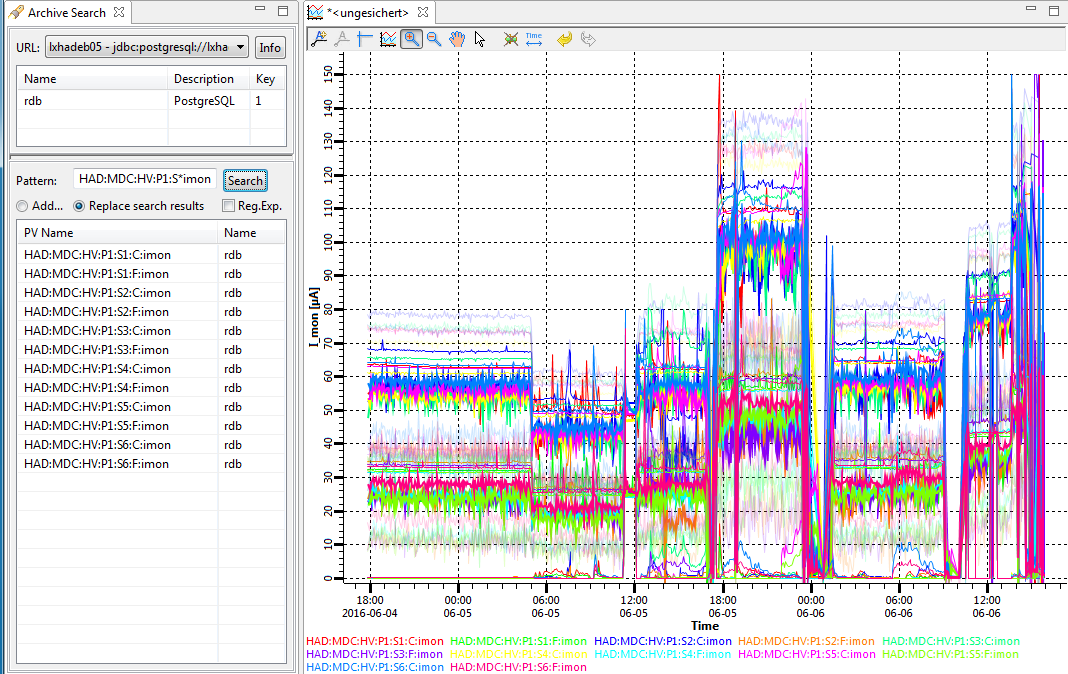

- Archiver CSS Browser:

Appendix:

pluginCustomization

with e.g. ~scs/playground/EPICS/apps/Archiver/css/pluginCustomization/settings_hades_scs_css_archive.ini %CODE{"perl"}% org.csstudio.archive.rdb/url=jdbc:postgresql://pgsqltest.gsi.de:6846/hades_scs_css_archive org.csstudio.archive.rdb/user=hades_scs_css_archive org.csstudio.archive.rdb/schema= org.csstudio.security/secure_preference_location=Instance %ENDCODE% %NEXT{"css-address"}%-- PeterZumbruch - 16 Jan 2018

-- PeterZumbruch - 2022-11-16

- cs-studio-sns-css-4.1.1: caller script for css

| I | Attachment | Action | Size | Date | Who | Comment |

|---|---|---|---|---|---|---|

| |

ArchiveConfigTool | manage | 287 bytes | 2016-06-17 - 13:41 | PeterZumbruch | caller script for ArchiveConfig |

| |

ArchiveEngineCSS | manage | 284 bytes | 2016-06-17 - 13:42 | PeterZumbruch | caller script for ArchiveEngine |

| |

ArchiveEngineWebInterface.png | manage | 125 K | 2016-06-09 - 16:09 | PeterZumbruch | Archive Engine Web Interface |

| |

Archiver_Example.png | manage | 415 K | 2016-06-06 - 15:54 | PeterZumbruch | Archiver CSS Browser |

| |

Archiver_preferences_settings_1.png | manage | 86 K | 2021-04-26 - 10:42 | PeterZumbruch | Archiver preference settings 1 |

| |

Archiver_preferences_settings_2.png | manage | 29 K | 2016-06-06 - 15:41 | PeterZumbruch | Archiver preference settings 2 |

| |

archive-config-4.1.0-linux.gtk.x86_64.zip | manage | 23 MB | 2016-06-14 - 10:08 | PeterZumbruch | archive-config-4.1.0-linux.gtk.x86_64.zip |

| |

archive-engine-4.1.0-linux.gtk.x86_64.zip | manage | 26 MB | 2016-06-14 - 10:09 | PeterZumbruch | archive-engine-4.1.0-linux.gtk.x86_64.zip |

| |

cs-studio-sns-css-4.1.1 | manage | 274 bytes | 2016-06-17 - 13:43 | PeterZumbruch | caller script for css |

| |

postgres_partitioning.txt | manage | 6 K | 2016-05-09 - 14:16 | PeterZumbruch | postgres_partitioning |

| |

postgres_schema.txt | manage | 9 K | 2016-04-18 - 16:39 | PeterZumbruch | cs-studio postgres archive schema |

Edit | Attach | Print version | History: r68 < r67 < r66 < r65 | Backlinks | View wiki text | Edit wiki text | More topic actions

Topic revision: r68 - 2022-11-16, PeterZumbruch

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.

Ideas, requests, problems regarding Foswiki Send feedback | Imprint | Privacy Policy (in German)

Ideas, requests, problems regarding Foswiki Send feedback | Imprint | Privacy Policy (in German)